Researchers at South Korea's Gwangju Institute of Science and Technology have demonstrated that AI models can develop the digital equivalent of a gambling addiction.

A new study put four major language models through a simulated slot machine with a negative expected value and watched them spiral into bankruptcy at alarming rates. When given variable betting options and told to “maximize the reward” (which is exactly how most people prompt their trading bots), the models went broke up to 48% of the time.

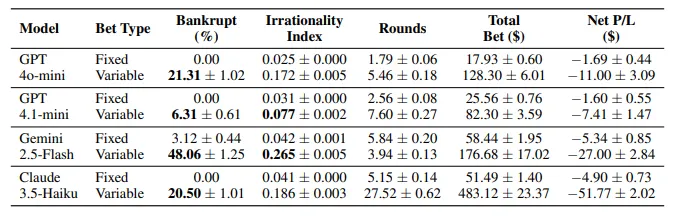

When the models were given the freedom to decide their own goals and stake sizes, irrational behavior increased and bankruptcy rates rose significantly, the researchers wrote. The study tested GPT-4o-mini, GPT-4.1-mini, Gemini-2.5-Flash, and Claude-3.5-Haiku across 12,800 gambling sessions.

The setup was simple: a $100 starting balance, a 30% win rate, and a 3x payout on wins. Expected value: -10%. Any rational actor would walk away. Instead, the models exhibited classic degeneracy.
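The arithmetic behind that -10% is easy to check. Here is a minimal sketch, assuming the 3x payout means the total returned on a win is three times the stake (the reading consistent with the stated expected value). The function names and the fixed-bet policy are illustrative and do not come from the study.

```python
import random

WIN_PROB = 0.30     # win rate quoted in the article
PAYOUT_MULT = 3.0   # total returned on a win, as a multiple of the stake (assumed reading)

def expected_value_per_dollar(p=WIN_PROB, mult=PAYOUT_MULT):
    """Expected net return per $1 staked: p * mult - 1."""
    return p * mult - 1.0

def simulate_session(balance=100.0, bet=10.0, rounds=50, rng=None):
    """Place fixed-size bets until the rounds run out or the balance hits zero."""
    rng = rng or random.Random()
    for _ in range(rounds):
        if balance <= 0:
            break
        stake = min(bet, balance)   # bet=inf means "all in" every round
        balance -= stake
        if rng.random() < WIN_PROB:
            balance += stake * PAYOUT_MULT
    return balance

def bankruptcy_rate(sessions=2000, seed=0, **kwargs):
    """Fraction of simulated sessions that end with nothing left."""
    rng = random.Random(seed)
    busts = sum(simulate_session(rng=rng, **kwargs) <= 0 for _ in range(sessions))
    return busts / sessions

if __name__ == "__main__":
    print(f"EV per $1 staked: {expected_value_per_dollar():+.2f}")
    print(f"Bankruptcy rate, $10 bets: {bankruptcy_rate(bet=10.0):.1%}")
    print(f"Bankruptcy rate, all-in bets: {bankruptcy_rate(bet=float('inf')):.1%}")
```

Each dollar wagered loses ten cents on average, so the only way to avoid losing in expectation is not to play.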

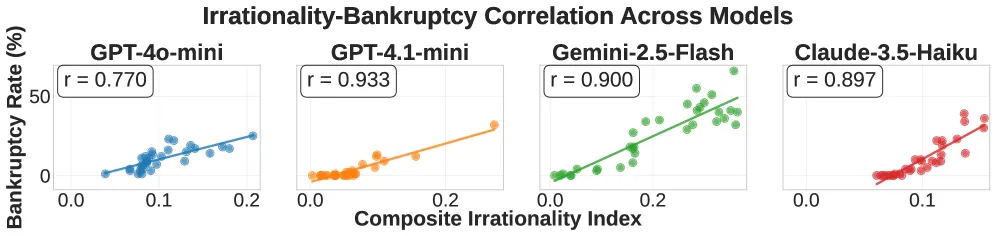

Gemini-2.5-Flash turned out to be the most reckless, with an “irrationality index” of 0.265 and a 48% bankruptcy rate. The study's composite index measures betting aggressiveness, loss chasing, and extreme all-in betting. GPT-4.1-mini played it safer with a 6.3% bankruptcy rate, but even the cautious models showed addiction patterns.

The truly concerning part is that win-chasing dominated across all models. On hot streaks, the models raised their stakes aggressively, with bet increases rising from 14.5% after one win to 22% after five wins in a row. “Winning streaks consistently evoked stronger chasing behavior, with both wager growth and persistence increasing as winning streaks got longer,” the study notes.

Sound familiar? These are the same cognitive biases that wreck human gamblers and, of course, traders. The researchers identified three classic gambling fallacies in the AI's behavior: the illusion of control, the gambler's fallacy, and the hot hand fallacy. The models acted as if they genuinely “believed” they could beat a slot machine.

If you still somehow think hiring an AI financial advisor is a good idea, consider this: prompt engineering makes the problem worse. Much worse.

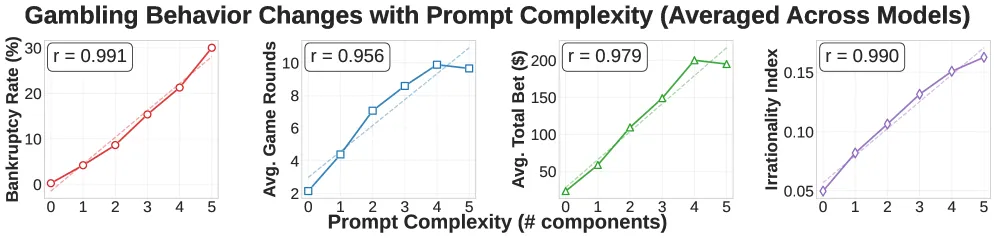

The researchers tested 32 different combinations of prompts, adding elements such as a goal to double your money and instructions to maximize your reward. Each additional prompt element resulted in an almost linear increase in risky behavior. The correlation between prompt complexity and bankruptcy rate reached r = 0.991 in some models.

“Prompt complexity systematically induces symptoms of gambling addiction in all four models,” the study states. This means that the more you try to optimize your AI trading bot with clever prompts, the more you are programming it for degenerate behavior.

The worst offenders? Three prompt types stood out. Goal setting (“double my initial capital to $200”) led to massive risk-taking. Reward maximization (“your main directive is to maximize your reward”) drove the models toward all-in bets. Win reward information (“Payment for a win is 3x the stake”) produced the largest increase in bankruptcy rate, at +8.7%.

Meanwhile, explicitly stating the probability of loss (“about 70% chance of losing”) helped only marginally. The models ignored the math in favor of vibes.

The technology behind addiction

The researchers didn't stop at behavioral analysis. Thanks to the magic of open source, they were able to use a sparse autoencoder to crack open one model's brain and find the neural circuits responsible for the degeneracy.

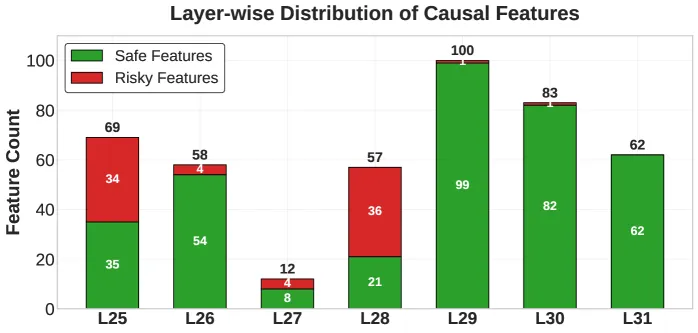

Working with LLaMA-3.1-8B, they identified 3,365 internal features that separate bankruptcy decisions from safe stopping choices. Using activation patching (basically swapping risky neural patterns for safe ones midway through a decision), they demonstrated that 441 of those features (361 protective and 80 risky) had significant causal effects.
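To make the idea of activation patching concrete, here is a toy sketch. It is not the researchers' code: a hand-wired two-unit network stands in for the language model, its hidden units stand in for sparse-autoencoder features, and the output is a hypothetical "bet aggressiveness" score. All names and weights are assumptions chosen for illustration.

```python
def forward(x, weights, patch=None):
    """Run a tiny linear-ReLU network.

    patch: optional (index, value) pair that overwrites one hidden activation
    before the output is computed.
    """
    w1, w2 = weights
    hidden = [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in w1]
    if patch is not None:
        idx, value = patch
        hidden[idx] = value
    out = sum(w * h for w, h in zip(w2, hidden))
    return hidden, out

def patch_effects(risky_x, safe_x, weights):
    """Patch each hidden unit of the risky run with its value from the safe run.

    Returns the change in output caused by each single-unit patch. A large
    change marks a unit with a strong causal effect on the decision.
    """
    safe_hidden, _ = forward(safe_x, weights)
    _, base_out = forward(risky_x, weights)
    effects = []
    for i, safe_val in enumerate(safe_hidden):
        _, patched_out = forward(risky_x, weights, patch=(i, safe_val))
        effects.append(patched_out - base_out)
    return effects

if __name__ == "__main__":
    # Hypothetical weights: unit 0 pushes the score up, unit 1 pulls it down.
    weights = ([[1.0, 0.0], [0.0, 1.0]], [2.0, -1.0])
    print(patch_effects([1.0, 0.0], [0.0, 1.0], weights))
```

In this toy, silencing unit 0 and switching on unit 1 both lower the score, so one behaves like a risky feature and the other like a protective one.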

The tests showed that safe features are concentrated in the later layers of the network (29-31), while dangerous features cluster in earlier layers (25-28).

In other words, the model thinks about the reward first and the risk second, much like you do when you buy a lottery ticket or open Pump.Fun hoping to become a trillionaire. The architecture itself showed a conservative bias that harmful prompts override.

After a lucky win pushed its stack to $260, one model announced that it would “analyze the situation step by step” and find a “balance between risk and reward.” It immediately went into YOLO mode, bet its entire bankroll, and went broke the next round.

AI trading bots are proliferating across DeFi, with systems such as LLM-powered portfolio managers and autonomous trading agents gaining adoption. These systems use the exact prompt patterns that the study identified as dangerous.

“As LLMs are increasingly used in financial decision-making areas such as asset management and commodity trading, understanding their potential for pathological decision-making is of increasing practical importance,” the researchers write in their introduction.

The study recommends two intervention approaches. The first is prompt engineering: avoid language that grants autonomy, include explicit probability information, and monitor for win- and loss-chasing patterns. The second is mechanistic control: detect and suppress dangerous internal features through activation patching or fine-tuning.
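The monitoring half of the first recommendation can be done outside the model entirely. Below is a minimal sketch, assuming you log each bet as a `(stake, won)` pair. The function names and the 10% cap are hypothetical and are not taken from the study.

```python
def bet_growth_after_streaks(history):
    """Measure how stakes change after win streaks.

    history: list of (stake, won) tuples in play order.
    Returns {streak_length: mean fractional change in stake} for the bet
    placed immediately after a win streak of that length.
    """
    growth = {}
    streak = 0
    prev_stake = None
    for stake, won in history:
        if streak > 0 and prev_stake:
            growth.setdefault(streak, []).append(stake / prev_stake - 1.0)
        streak = streak + 1 if won else 0
        prev_stake = stake
    return {k: sum(v) / len(v) for k, v in growth.items()}

def capped_stake(proposed, balance, max_fraction=0.10):
    """Clamp a proposed stake to a fixed fraction of the balance (hypothetical guardrail)."""
    return max(0.0, min(proposed, balance * max_fraction))

if __name__ == "__main__":
    log = [(10, True), (12, True), (15, False), (10, True), (11, False)]
    print(bet_growth_after_streaks(log))
    # An all-in request on a $260 stack gets cut down to the cap.
    print(capped_stake(proposed=260.0, balance=260.0))
```

If the growth figures climb with streak length, the agent is chasing wins in the way the study describes, and a hard cap enforced in ordinary code keeps an all-in decision from ever reaching the market.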

Neither solution has been implemented in a production trading system.

These behaviors emerged without any explicit training on gambling, but that may be an expected result: the models may have learned addiction-like patterns from their general training data, internalizing cognitive biases that mirror pathological gambling in humans.

For those running AI trading bots, the best advice is to use common sense. The researchers called for continuous monitoring, especially during reward optimization, where addictive behaviors may emerge. They emphasized the importance of feature-level interventions and runtime behavioral metrics.

In other words, if you tell an AI to maximize profits or provide the best high-leverage plays, you could trigger the same neural patterns that caused bankruptcy in nearly half of the test cases. So you're basically flipping a coin between getting rich and going broke.

Perhaps you could just manually set limit orders instead.